Key Takeaways

TL;DR:

There’s no single “best” clinical trial software because clinical research is too complex and specialized. The industry has a number of different software categories (CTMS, EDC, eTMF, RTSM, etc.), each solving distinct problems.

“Best” lists are more about clickbait than comprehensive information. They mislead by comparing incompatible tools.

Success depends on choosing the right software for your specific needs: your organization’s size, trial phase, regulatory requirements, and integration capabilities. Focus on fit-for-purpose selection based on interoperability, compliance, user experience, scalability, and total cost of ownership, not popularity rankings.

The goal isn’t finding one perfect tool, but building an integrated ecosystem of tools that work together for your unique workflow.


The Illusion of “Best”

Search online for “Best Clinical Trial Software,” and you’ll find a familiar pattern: listicles, rankings, and “top 10” guides claiming to reveal the ultimate platform for modern research teams. These articles attract clicks, but they rarely deliver unbiased or comprehensive information. These lists oversimplify a landscape built on specialization.

They group unlike systems together as if they’re competing for the same purpose when, in reality, each solves a different operational problem. A system designed for randomization (RTSM) cannot replace an EDC. A CTMS cannot substitute for an eTMF. A patient payment solution cannot do what a regulatory submission tool does.

Each software type operates in its own domain, governed by its own rules, data structures, and compliance requirements. Comparing them as though they compete on the same plane is like ranking airplanes, trucks, and submarines by “best vehicle.”

The problem isn’t just exaggeration. It’s that the entire premise of a single “best” clinical trial software solution is flawed. Clinical research is too complex, too regulated, and too specialized for one-size-fits-all answers.

Here’s the truth that experienced buyers already know: there is no single “best” clinical trial software.

Every organization’s “best” is different. The right solution depends on your role in the research ecosystem, the phase and scale of your trials, your data complexity, and your internal capabilities. A sponsor’s “best” EDC system might be too complex for a single-site study, while a startup’s favorite lightweight CTMS could lack the audit trail depth required for global Phase III operations. The technology that works for a global sponsor running 300 multicenter studies is not what a lean biotech needs when preparing for its first Phase I trial. There is no universal hierarchy, only fit-for-purpose solutions.

The constant focus on “best” leads organizations to chase popularity over fit.

The result: expensive implementations, frustrated teams, and systems that never quite match the workflow they were meant to improve.

The notion of “best” collapses even further when you realize how many types of software actually power the clinical research ecosystem.

Software in clinical research isn’t a single category. It’s a web of specialized systems that evolved to address distinct challenges: data capture, quality management, logistics, patient engagement, and more. That complexity is both the beauty and the burden of the industry’s technology stack.

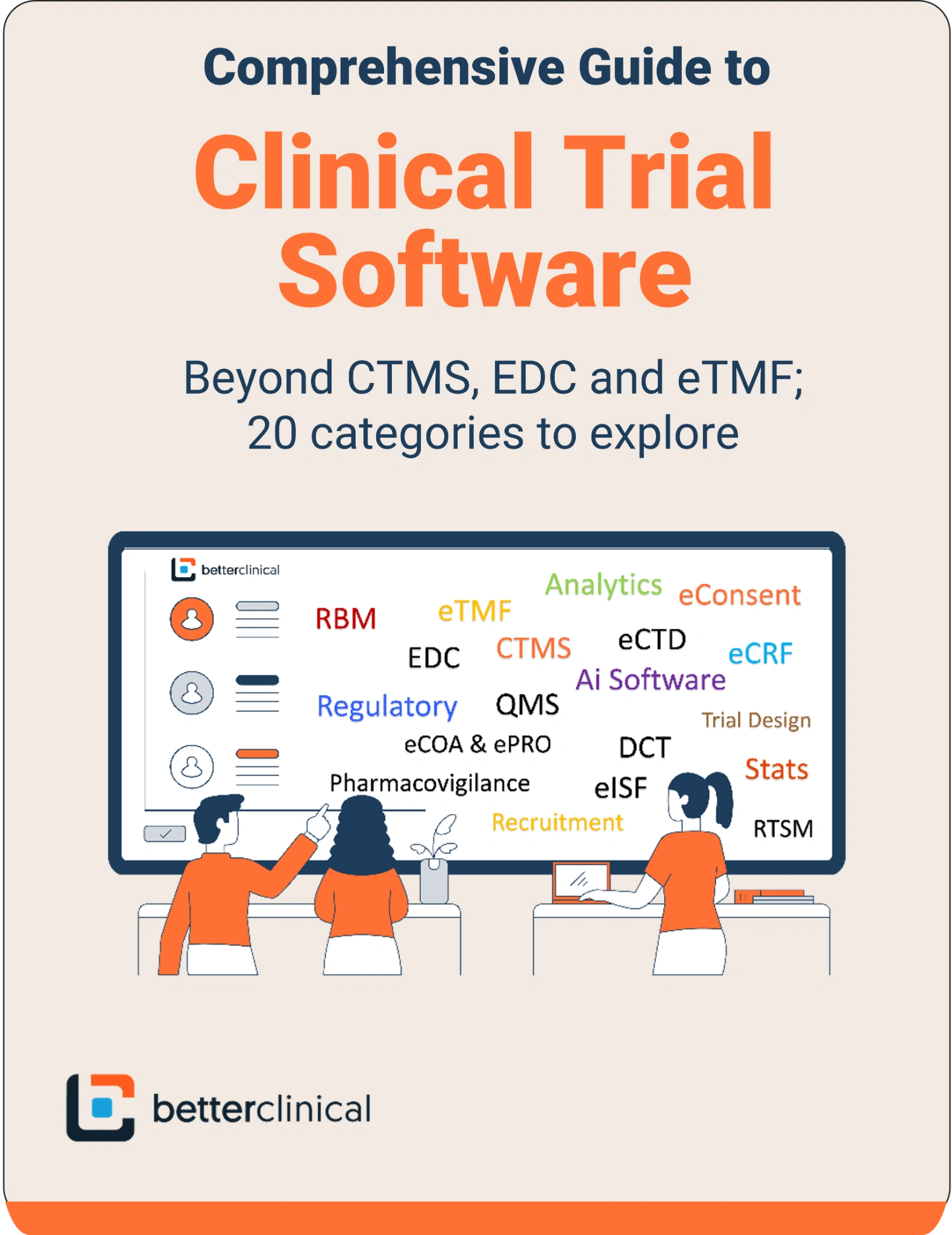

The Clinical Trial Software Landscape

To understand why “best” lists are misleading, you have to see the ecosystem as a whole. Clinical research software spans several interdependent categories, each with its own role in the life cycle of a study.

Below is a sample of 20 categories of clinical trial software that define this landscape:

- Clinical Trial Management System (CTMS) – The operational hub that tracks study milestones, tasks, sites, budgets, and performance.

- Electronic Data Capture (EDC) – Digital system for collecting and validating clinical trial data at the source.

- Electronic Trial Master File (eTMF) – Central repository for all trial documents, version control, and inspection readiness.

- Regulatory Software – Tools to manage regulatory obligations, submissions, compliance, and correspondence.

- Randomization & Trial Supply Management (RTSM / IRT) – Automates randomization logic, drug supply, and patient allocation logistics.

- eConsent – Digital workflow for capturing, tracking, and auditing informed consent electronically.

- eCOA / ePRO – Electronic collection of patient or clinician-reported outcomes and assessments.

- Clinical Analytics – Dashboarding and analytical tools that turn raw trial data into operational insight.

- Decentralized Clinical Trials (DCT) Software – Platforms that support hybrid or fully remote trial execution (wearables, telehealth, remote data capture).

- Patient Recruitment Software – Systems designed to identify, engage, and enroll eligible participants.

- AI Software for Clinical Trials – Machine learning and automation tools for anomaly detection, predictions, protocol optimization, etc.

- Pharmacovigilance Software – Tools for monitoring, managing, and reporting adverse events and drug safety signals.

- Quality Management System (QMS) – System to manage QA/QC processes, deviations, CAPAs, audits, and compliance oversight.

- Electronic Investigator Site File (eISF) – Digital site-level binder for managing site documentation, training records, and site-side compliance.

- Clinical Trial Design Software – Tools for protocol creation, study modeling, simulation, and feasibility planning.

- Electronic Case Report Form (eCRF) – Digital versions of the case report forms used within EDC systems (form design and data capture).

- Risk-Based Monitoring / Quality (RBM / RBQM) – Software that drives monitoring focus based on risk metrics and statistical triggers.

- Electronic Common Technical Document (eCTD) – Systems that structure, compile, and manage regulatory submission dossiers.

- Statistical Analysis Software – Analytics engines for computations, modeling, inferential statistics, and data displays.

- Clinical Trial Patient Payments – Platforms to manage participant payments, reimbursements, tracking, and compliance.

There are probably more categories that can be added to this list. However, this is a good place to start. Taken together, these 20 categories show why a “best software” claim is meaningless.

Each category addresses a distinct phase or pain point in the trial process, and each can have multiple “best” tools depending on context. Success comes not from picking the top-ranked product, but from assembling the right mix of interoperable systems for your operational goals.

Together, these categories form the true digital infrastructure of modern clinical research.

Fit-for-Purpose

Instead of asking “What’s the best software system?”, the smarter question is “What’s the right software system for our current needs and future plans?”

For example:

- A small biotech with limited staff might value simplicity and vendor-managed hosting over full configurability.

- A large sponsor might prioritize system interoperability, audit logs, and API integration with data warehouses.

- A CRO might need multi-sponsor flexibility, rapid onboarding, and regulatory documentation tools.

The right choice often depends less on what the software can do, and more on how well it supports your operating model.

When every vendor claims to be the best, the real differentiators come down to a consistent set of decision factors that hold true across categories. A fit-for-purpose decision considers the following:

1. Organization Scale

A global sponsor may require enterprise-grade CTMS and RBQM integrations; a smaller biotech may need lightweight, turnkey tools.

2. Study Design

Adaptive or decentralized trials demand flexibility, real-time monitoring, and remote data capture.

3. Interoperability

How easily can the software exchange data with your EDC, CTMS, or analytics tools?

APIs, CDISC conformance, and support for data exchange standards determine whether your tech stack works in unison or isolation.

4. Compliance and Validation

Clinical software must be validated and must comply with requirements such as GxP guidelines, 21 CFR Part 11, and regional privacy laws. Regulators care less about the brand and more about documentation, traceability, and validation evidence. Always verify audit trails, access controls, and validation reports.

5. User Experience

A powerful system that frustrates users will slow down your study. Ease of use, training availability, and intuitive workflows matter far more than feature lists.

6. Scalability

The right system should grow with your study pipeline. A tool that fits your Phase I trial must also scale to multi-country Phase III if needed. Evaluate performance under data load, user volume, and regional expansion.

7. Data Quality and Analytics

Good tools enable proactive monitoring, not just data entry.

Look for systems that make insights accessible and auditable, whether for quality oversight, risk management, or submission readiness.

8. Support and Vendor Stability

Strong technology is only as reliable as the team behind it.

Evaluate vendor responsiveness, roadmap transparency, and validation documentation, especially in regulated environments.

9. Total Cost of Ownership

Licensing is only part of the picture.

Consider configuration time, training, hosting, integrations, and long-term maintenance when comparing solutions. True ROI comes from reducing rework, audit risk, and delays, not just license discounts.
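The cost components above can be added up in a few lines. The following is a minimal sketch, not a pricing model: the `CostProfile` fields, the two tools, and every figure are hypothetical and chosen only to illustrate the arithmetic.

```python
from dataclasses import dataclass


@dataclass
class CostProfile:
    """Hypothetical cost inputs for one candidate system (illustrative figures only)."""
    name: str
    annual_license: float
    configuration: float       # one-time setup and validation effort
    training: float            # one-time
    integrations: float        # one-time cost to connect to other systems
    annual_hosting: float
    annual_maintenance: float


def total_cost_of_ownership(profile: CostProfile, years: int) -> float:
    """One-time costs plus recurring costs over the evaluation period."""
    one_time = profile.configuration + profile.training + profile.integrations
    recurring = (
        profile.annual_license + profile.annual_hosting + profile.annual_maintenance
    ) * years
    return one_time + recurring


# Tool A has the cheaper license; Tool B bundles hosting and needs less setup.
tool_a = CostProfile("Tool A", 40_000, 60_000, 15_000, 50_000, 10_000, 20_000)
tool_b = CostProfile("Tool B", 65_000, 10_000, 5_000, 10_000, 0, 5_000)

for tool in (tool_a, tool_b):
    print(tool.name, total_cost_of_ownership(tool, years=5))
```

With these made-up numbers, the tool with the lower license fee ends up costing more over five years once configuration, integrations, and maintenance are included. The sketch leaves out harder-to-quantify items such as rework, audit risk, and delays.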

When you strip away marketing claims, these are the attributes that determine whether a solution truly fits your needs.
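One way to make the nine factors comparable across candidates is a simple weighted scoring matrix. The sketch below is an assumption about how a team might do this, not a standard method: the factor keys, the 1 to 5 rating scale, the weights, and both candidate tools are hypothetical.

```python
# The nine decision factors from this article, as hypothetical dictionary keys.
FACTORS = [
    "organization_scale",
    "study_design",
    "interoperability",
    "compliance_validation",
    "user_experience",
    "scalability",
    "data_quality_analytics",
    "vendor_support",
    "total_cost_of_ownership",
]


def fit_score(weights: dict, ratings: dict) -> float:
    """Weighted average of 1-5 ratings; weights are normalised, so they need not sum to 1."""
    missing = [f for f in FACTORS if f not in weights or f not in ratings]
    if missing:
        raise ValueError(f"missing factors: {missing}")
    total_weight = sum(weights[f] for f in FACTORS)
    return sum(weights[f] * ratings[f] for f in FACTORS) / total_weight


# A small biotech might weight usability, compliance, and cost more heavily.
biotech_weights = {f: 1 for f in FACTORS}
biotech_weights.update(
    {"user_experience": 3, "compliance_validation": 3, "total_cost_of_ownership": 3}
)

# Illustrative ratings (1 = poor fit, 5 = strong fit) for two made-up candidates.
enterprise_suite = dict(zip(FACTORS, [2, 4, 5, 5, 2, 5, 4, 4, 2]))
lightweight_tool = dict(zip(FACTORS, [5, 3, 3, 4, 5, 2, 3, 3, 5]))

for name, ratings in [("enterprise", enterprise_suite), ("lightweight", lightweight_tool)]:
    print(name, round(fit_score(biotech_weights, ratings), 2))
```

The same ratings scored with a global sponsor's weights (for example, heavier on interoperability and scalability) could reverse the order, which is the point: the score reflects fit for one organization, not a universal ranking.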

Why Ecosystem Thinking Beats Individual Tools

Clinical research success increasingly depends on how systems work together, not which single system dominates.

The best-run organizations view their software not as isolated products but as interconnected capabilities, each serving a purpose within a larger operational framework.

A well-integrated ecosystem enables:

- Seamless data flow between EDC, CTMS, and eTMF.

- Real-time oversight through RBQM and analytics.

- Faster site activation and monitoring through startup and site management tools.

- Better patient experience via eConsent, eCOA, and payment automation.

When your ecosystem works as one, “best” becomes irrelevant.

The goal is not to find one tool that does everything but to assemble the right stack for your workflow, risk model, and regulatory context.
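Ecosystem thinking can be checked before purchase by listing the data flows your workflow needs and comparing them with the integrations a candidate stack supports. This is a minimal sketch under stated assumptions: the flows and the supported integrations below are hypothetical, and a real assessment would also cover data standards, direction, and latency.

```python
# Data flows the team needs, as (source category, destination category) pairs.
required_flows = {
    ("EDC", "CTMS"),
    ("CTMS", "eTMF"),
    ("EDC", "RBQM"),
    ("eConsent", "EDC"),
}

# Integrations the candidate stack supports (hypothetical vendor answers).
supported_flows = {
    ("EDC", "CTMS"),
    ("CTMS", "eTMF"),
    ("eConsent", "EDC"),
}


def missing_flows(required: set, supported: set) -> list:
    """Return required flows with no supporting integration, sorted for stable output."""
    return sorted(required - supported)


gaps = missing_flows(required_flows, supported_flows)
print("Integration gaps:", gaps)
```

Here the stack has no path from EDC to RBQM, so risk-based oversight would rely on manual exports. Each gap found this way is a cost or a workaround to plan for before signing.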

Common Pitfalls in Software Selection

Despite the sophistication of the industry, many organizations still fall into predictable traps:

- Buying what’s popular: Mistaking market dominance for suitability.

- Over-engineering early: Deploying enterprise-scale tools before establishing processes.

- Ignoring integration costs: Underestimating the resources needed to connect systems.

- Skipping validation: Assuming cloud-based equals compliant.

- Neglecting user adoption: Forgetting that even the best tools fail if no one wants to use them.

Avoiding these pitfalls requires a mindset shift, from purchasing features to investing in fit and process maturity.

Summary: Clarity Over Clickbait

The pursuit of the “best” clinical trial software is a distraction. The clinical research software landscape is broad, nuanced, and essential.

Calling any tool “the best” is like calling one instrument “the best” in an orchestra. Each plays its part in the harmony.

Pharma, biotech, and CRO leaders must reject simplistic rankings and embrace fit-for-purpose thinking. The path to efficiency and compliance isn’t paved with “top 10” lists; what truly matters is alignment between system, workflow, regulation, and strategy.

Every organization has its own definition of “best”:

For some, it’s usability and speed. For others, scalability and integration. For global players, compliance and automation.

The right choice isn’t the tool with the most awards or reviews. It’s the one that makes your operations safer, faster, and more resilient.

In clinical research, the goal is not to chase the best tool; it is to build the right stack for your operation.

The Clinical Trial Software Landscape & Directory

We built the clinical trial software landscape and directory listings to help with category classification and vendor selection. We hope these tools allow sponsors, CROs, and technology leaders to explore the full landscape systematically, without implying rankings or endorsements.

Instead of asking “What’s the best CTMS?”, teams can ask, “Which CTMS aligns with our data model, region, and resource capacity?”

That’s a fundamentally stronger question, one that leads to smarter and more compliant decisions.


FAQ

Why is there no single “best” clinical trial software?

Because each type of clinical trial software serves a specific function. What works well for a large sponsor managing global Phase III studies may be overly complex for a small biotech running a single Phase I trial. The right choice always depends on context such as organization size, study design, and operational maturity, not rankings or popularity.

How should we choose clinical trial software?

Start by defining the operational problem you need to solve, then map it to the correct software category. Evaluate tools for organizational scale, study design, interoperability, validation, usability, scalability, and total cost of ownership. Pilot the shortlisted systems before full deployment to confirm fit and integration quality.

What does “fit-for-purpose” mean?

Fit-for-purpose means selecting technology that aligns with your specific workflows, team capacity, and regulatory environment. Instead of chasing the most popular platform, organizations should focus on systems that integrate smoothly, meet compliance standards, and genuinely support their study goals.

What are the most common pitfalls in software selection?

The most common pitfalls include: buying what’s popular instead of what fits your needs, over-engineering with enterprise tools before establishing proper processes, underestimating integration costs between systems, assuming cloud-based automatically means compliant, and neglecting user adoption by choosing tools that are too complex. Success requires shifting from purchasing features to investing in the right fit for your situation.